Create AI applications using Hugging Face Transformers

Hugging Face 🤗 is a platform and community for machine learning and artificial intelligence. It offers various tools and resources for developers, researchers, and enthusiasts.

In simple terms, Hugging Face is the GitHub for AI.

In this article, I will show you how to use the Hugging Face 🤗 Transformers library, so you can build AI applications without needing to be an expert in the field.

But before I dig into transformers, let's see what other options are available in Hugging Face.

Note that you need a basic knowledge of Python to follow this article.

So, let's get started.

Hugging Face Options

If you visit the Hugging Face 🤗 website, you'll notice that it has a few sections. You might be confused (as I was), so let's describe each one:

- Model Hub: A vast repository of thousands of open-source machine learning models for various tasks, like natural language processing, computer vision, and audio processing. You can easily explore, download, and use these models for your projects.

- Transformers Library: A popular open-source library that provides easy-to-use APIs for working with transformer-based models, which have become the state of the art for many NLP tasks.

- Datasets: A comprehensive collection of datasets for training and evaluating machine learning models.

- Spaces: A platform for showcasing machine learning demos and applications.

- Community: A vibrant community of developers, researchers, and enthusiasts who collaborate, share ideas, and contribute to the platform.

What are transformers?

As I mentioned, I will focus only on Transformers in this article, because the library supports numerous tasks that cover almost any need.

But what are transformers?

Transformers provides APIs and tools to easily download and train state-of-the-art pretrained models. Using pretrained models can reduce your compute costs and carbon footprint, and save you the time and resources required to train a model from scratch.

Using Transformers is pretty simple:

- You specify the task/model that you want.

- The model is downloaded from Hugging Face 🤗 and cached on your machine.

- You run the model directly from your machine.

Amazing, isn't it? You don't pay per request, and because the model runs locally, your data is never sent to a third-party provider, which is great for privacy. On top of that, once a model is cached, you can run it offline. That's incredible. 🤯

Sentiment analyzer using Transformers

Let's build a sentiment analyzer with Transformers.

A sentiment analyzer is a tool that determines the emotional tone of a piece of text, classifying it as positive, negative, or neutral.

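First, install the transformers package. Pipelines also need a deep-learning backend; PyTorch is the most common choice:

```shell
pip install transformers torch
```

Then create a sentiment-analysis pipeline and run it on a couple of sentences:

```python
from transformers import pipeline

# Sentiment-analysis pipeline backed by a DistilBERT model fine-tuned on SST-2
sentiment_pipeline = pipeline(
    "sentiment-analysis",
    model="distilbert/distilbert-base-uncased-finetuned-sst-2-english",
)

data = ["I love you", "I hate you"]

# The pipeline returns one {'label', 'score'} dict per input sentence
print(sentiment_pipeline(data))
```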

If you run the code, you'll get the following output:

```
[{'label': 'POSITIVE', 'score': 0.9998656511306763},
 {'label': 'NEGATIVE', 'score': 0.9991129040718079}]
```
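The DistilBERT SST-2 model used here only has two labels, POSITIVE and NEGATIVE, so it never returns "neutral". If you need a neutral class, one simple heuristic (my own assumption, not something the model provides) is to treat low-confidence predictions as neutral. Here is a minimal sketch; the helper name `to_three_way` and the 0.75 threshold are arbitrary choices for illustration:

```python
from typing import Dict, List


def to_three_way(results: List[Dict], threshold: float = 0.75) -> List[str]:
    """Hypothetical helper: map binary sentiment results to positive/negative/neutral.

    Heuristic (an assumption, not part of the model): any prediction whose
    score is below `threshold` is reported as "neutral".
    """
    labels = []
    for result in results:
        if result["score"] < threshold:
            labels.append("neutral")
        else:
            # "POSITIVE" -> "positive", "NEGATIVE" -> "negative"
            labels.append(result["label"].lower())
    return labels


# Output copied from the sentiment_pipeline call above
example_output = [
    {"label": "POSITIVE", "score": 0.9998656511306763},
    {"label": "NEGATIVE", "score": 0.9991129040718079},
]
print(to_three_way(example_output))  # ['positive', 'negative']
```

Because a two-label model always gives its top label a score of at least 0.5, the threshold has to sit somewhere above 0.5; tune it on your own data.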

But how did that happen? And what is that fancy pipeline function?

Model Caching

When you use Transformers, the model is downloaded to your computer, and every interaction with it runs directly on your machine.

By default, Hugging Face 🤗 stores models in the ~/.cache/huggingface/hub folder. You can change this location, for example by setting the HF_HOME environment variable.
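As far as I understand the huggingface_hub defaults, the cache location is resolved in this order: HF_HUB_CACHE if set, otherwise HF_HOME/hub, otherwise $XDG_CACHE_HOME/huggingface/hub, otherwise ~/.cache/huggingface/hub. The function below is a hypothetical helper that approximates that order; it is not part of the library, so check the official documentation for your installed version:

```python
import os
from pathlib import Path


def resolve_hub_cache(env=None) -> Path:
    """Hypothetical helper approximating the default Hugging Face Hub cache directory."""
    env = os.environ if env is None else env
    # 1. An explicit hub cache path wins.
    if env.get("HF_HUB_CACHE"):
        return Path(env["HF_HUB_CACHE"]).expanduser()
    # 2. Otherwise use HF_HOME, falling back to $XDG_CACHE_HOME/huggingface
    #    and finally to ~/.cache/huggingface.
    if env.get("HF_HOME"):
        hf_home = Path(env["HF_HOME"]).expanduser()
    else:
        xdg_cache = env.get("XDG_CACHE_HOME") or str(Path.home() / ".cache")
        hf_home = Path(xdg_cache).expanduser() / "huggingface"
    # 3. Downloaded models live in the "hub" subfolder.
    return hf_home / "hub"


print(resolve_hub_cache())
```

For example, running `export HF_HOME=/data/huggingface` in your shell before starting Python should move the model cache to /data/huggingface/hub.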

Read more about caching in the Hugging Face Hub documentation.

OK, let's list the cache folder and see what it contains:

```
❯ ls -la ~/.cache/huggingface/hub
total 16
drwxr-xr-x 11 ahmad staff 352 Aug 10 10:42 .
drwxr-xr-x 4 ahmad staff 128 Jun 12 20:03 ..
drwxr-xr-x 7 ahmad staff 224 Jun 14 09:17 .locks
drwxr-xr-x 6 ahmad staff 192 Jun 12 19:54 models--bigscience--T0_3B
drwxr-xr-x 6 ahmad staff 192 Jun 12 19:45 models--distilbert--distilbert-base-uncased-finetuned-sst-2-english
drwxr-xr-x 6 ahmad staff 192 Jun 14 09:18 models--facebook--bart-large-mnli
drwxr-xr-x 6 ahmad staff 192 Jun 12 19:58 models--gradientai--Llama-3-8B-Instruct-Gradient-1048k
drwxr-xr-x 3 ahmad staff 96 Aug 1 19:18 models--meta-llama--Meta-Llama-3.1-8B-Instruct
drwxr-xr-x 6 ahmad staff 192 Jun 14 09:09 models--microsoft--resnet-101
-rw-r--r-- 1 ahmad staff 1 Jun 12 20:02 version.txt
-rw-r--r-- 1 ahmad staff 1 Aug 10 10:42 version_diffusers_cache.txt
```

On my computer, I've downloaded 6 models from Hugging Face 🤗.
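The folder names in this listing follow a visible pattern: a `models--` prefix, then the repository id with `/` replaced by `--`. Here is a small stdlib-only sketch that turns the listing back into repository ids; `list_cached_models` is a hypothetical helper, not a library function. The huggingface_hub package also provides a `scan_cache_dir()` function and a `huggingface-cli scan-cache` command that report the same information in more detail.

```python
from pathlib import Path
from typing import List


def list_cached_models(cache_dir: Path) -> List[str]:
    """Hypothetical helper: return repo ids (e.g. "microsoft/resnet-101") found in a hub cache."""
    prefix = "models--"
    repo_ids = []
    for entry in sorted(Path(cache_dir).iterdir()):
        # Skip files such as version.txt and folders such as .locks
        if entry.is_dir() and entry.name.startswith(prefix):
            # "models--microsoft--resnet-101" -> "microsoft/resnet-101"
            repo_ids.append(entry.name[len(prefix):].replace("--", "/"))
    return repo_ids


default_cache = Path.home() / ".cache" / "huggingface" / "hub"
if default_cache.is_dir():
    for repo_id in list_cached_models(default_cache):
        print(repo_id)
```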

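Now, if you disconnect from the internet and re-run the code, you should get the same results, because the model is already stored on your computer. To stop Transformers from trying to reach the Hub at all, set the environment variable HF_HUB_OFFLINE=1 before running your script.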
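Before going offline, it can help to check that a model's files really are in the cache. In the hub cache layout, downloaded files live under `models--<org>--<name>/snapshots/<commit-hash>/`, so a rough check is whether at least one non-empty snapshot folder exists. A minimal sketch; `is_model_cached` is a hypothetical helper, and it does not verify that every file of the model was downloaded:

```python
from pathlib import Path


def is_model_cached(repo_id: str, cache_dir: Path) -> bool:
    """Hypothetical helper: True if `repo_id` has at least one non-empty snapshot in the cache."""
    folder = Path(cache_dir) / ("models--" + repo_id.replace("/", "--"))
    snapshots = folder / "snapshots"
    if not snapshots.is_dir():
        return False
    # Each snapshot is a folder named after a commit hash that holds the model files.
    return any(
        snapshot.is_dir() and any(snapshot.iterdir())
        for snapshot in snapshots.iterdir()
    )


cache = Path.home() / ".cache" / "huggingface" / "hub"
print(is_model_cached("distilbert/distilbert-base-uncased-finetuned-sst-2-english", cache))
```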

Pipeline

The pipeline function is a high-level wrapper around the models: it bundles the preprocessing (such as tokenization), the model itself, and the post-processing that turns raw model outputs into readable labels and scores.

For example, to interact with a text-classification model, you use the text-classification pipeline.

The pipeline function takes a task, and the task determines which pipeline is returned. Hugging Face 🤗 supports numerous tasks.
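To make the idea of task-based dispatch concrete, here is a toy sketch. Everything in it is hypothetical: the registry, the keyword-counting "model", and the class and function names are made up for illustration, and the real pipeline function does far more (loading tokenizers, model weights, devices). What it mirrors is the overall shape: a task string selects a pipeline class, and that class runs a preprocess step, then the model, then a postprocess step (real Transformers pipelines split this work into `preprocess`, `_forward`, and `postprocess` methods). Like the real library, the toy treats "sentiment-analysis" as an alias of "text-classification".

```python
from typing import Callable, Dict, List


class ToyTextClassificationPipeline:
    """Hypothetical stand-in for a text-classification pipeline."""

    POSITIVE_WORDS = {"love", "great", "good"}
    NEGATIVE_WORDS = {"hate", "bad", "awful"}

    def preprocess(self, text: str) -> List[str]:
        # Real pipelines tokenize here; the toy just lowercases and splits on whitespace.
        return text.lower().split()

    def forward(self, tokens: List[str]) -> int:
        # Real pipelines run the neural network here; the toy counts keywords.
        positives = sum(token in self.POSITIVE_WORDS for token in tokens)
        negatives = sum(token in self.NEGATIVE_WORDS for token in tokens)
        return positives - negatives

    def postprocess(self, raw: int) -> Dict[str, str]:
        # Real pipelines turn logits into labels and scores.
        return {"label": "POSITIVE" if raw >= 0 else "NEGATIVE"}

    def __call__(self, inputs):
        texts = [inputs] if isinstance(inputs, str) else inputs
        return [self.postprocess(self.forward(self.preprocess(t))) for t in texts]


# Hypothetical registry: task name -> pipeline class
TOY_TASKS: Dict[str, Callable[[], ToyTextClassificationPipeline]] = {
    "text-classification": ToyTextClassificationPipeline,
}
TOY_ALIASES = {"sentiment-analysis": "text-classification"}


def toy_pipeline(task: str):
    """Return a pipeline object for `task`, resolving aliases first."""
    task = TOY_ALIASES.get(task, task)
    if task not in TOY_TASKS:
        raise KeyError(f"Unknown task {task!r}; available: {sorted(TOY_TASKS)}")
    return TOY_TASKS[task]()


print(toy_pipeline("sentiment-analysis")(["I love you", "I hate you"]))
# [{'label': 'POSITIVE'}, {'label': 'NEGATIVE'}]
```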

But how do you know what tasks are supported? 🤔

Tasks

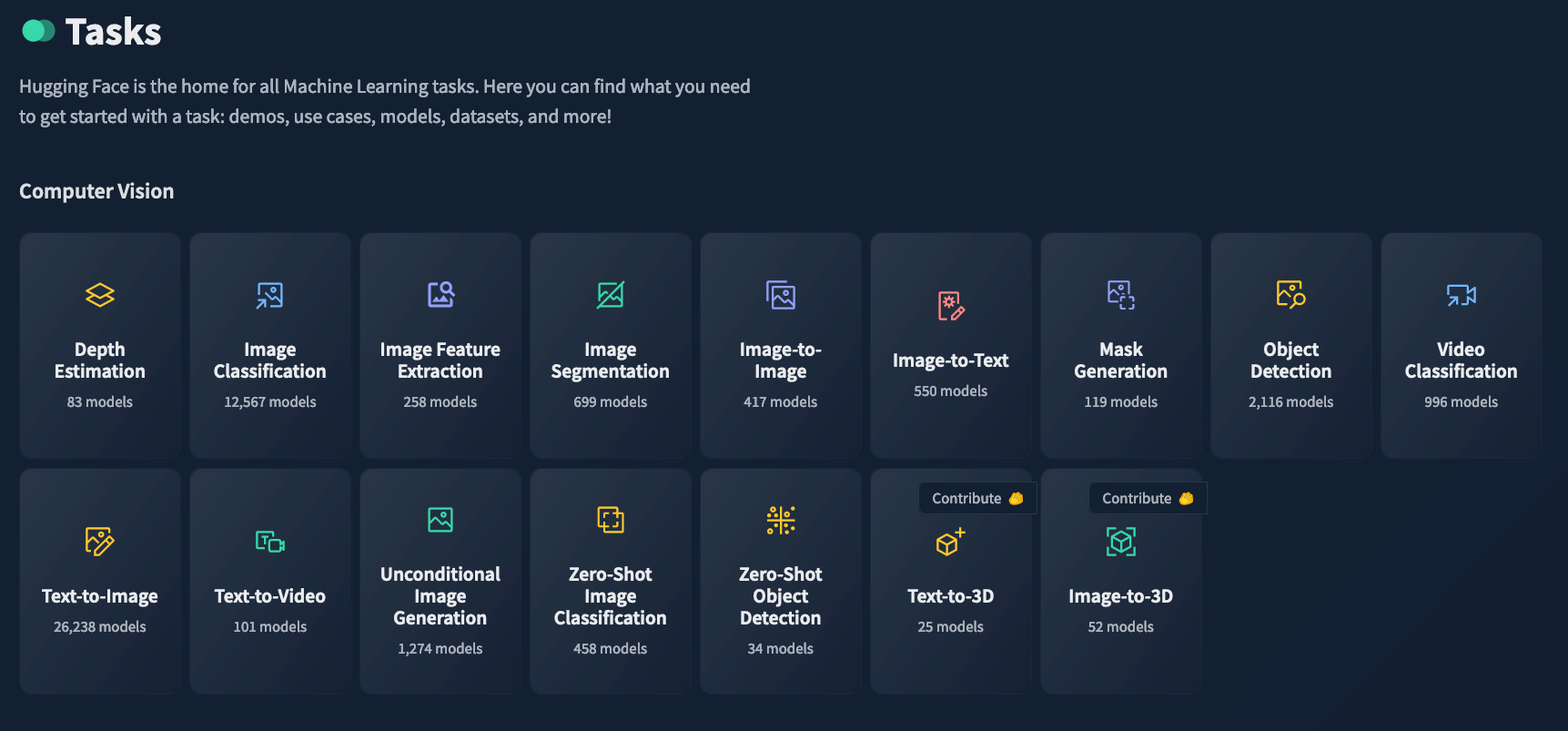

Head to Hugging Face's tasks page 🤗 and click on the category that you want, for example, Image Classification:

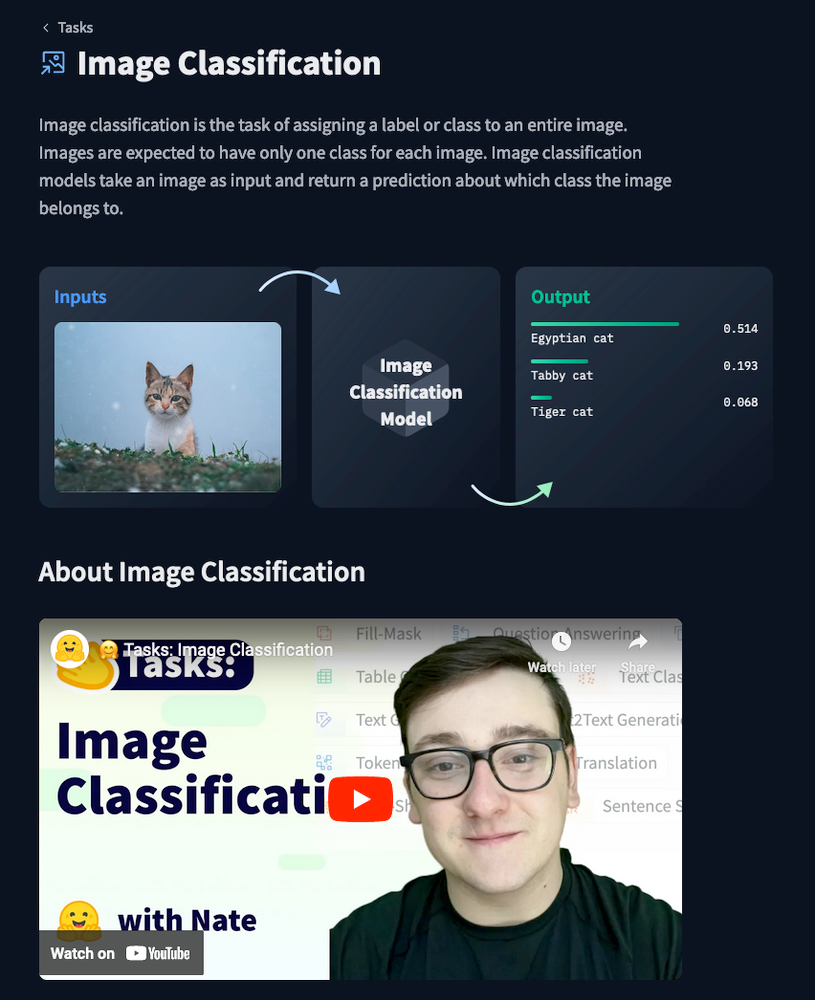

The next page shows you a brief introduction to Image Classification and how it's supposed to work:

Note: the model parameter of the pipeline function is optional. If you don't set it, a default model is used; for image classification, the default model is google/vit-base-patch16-224.

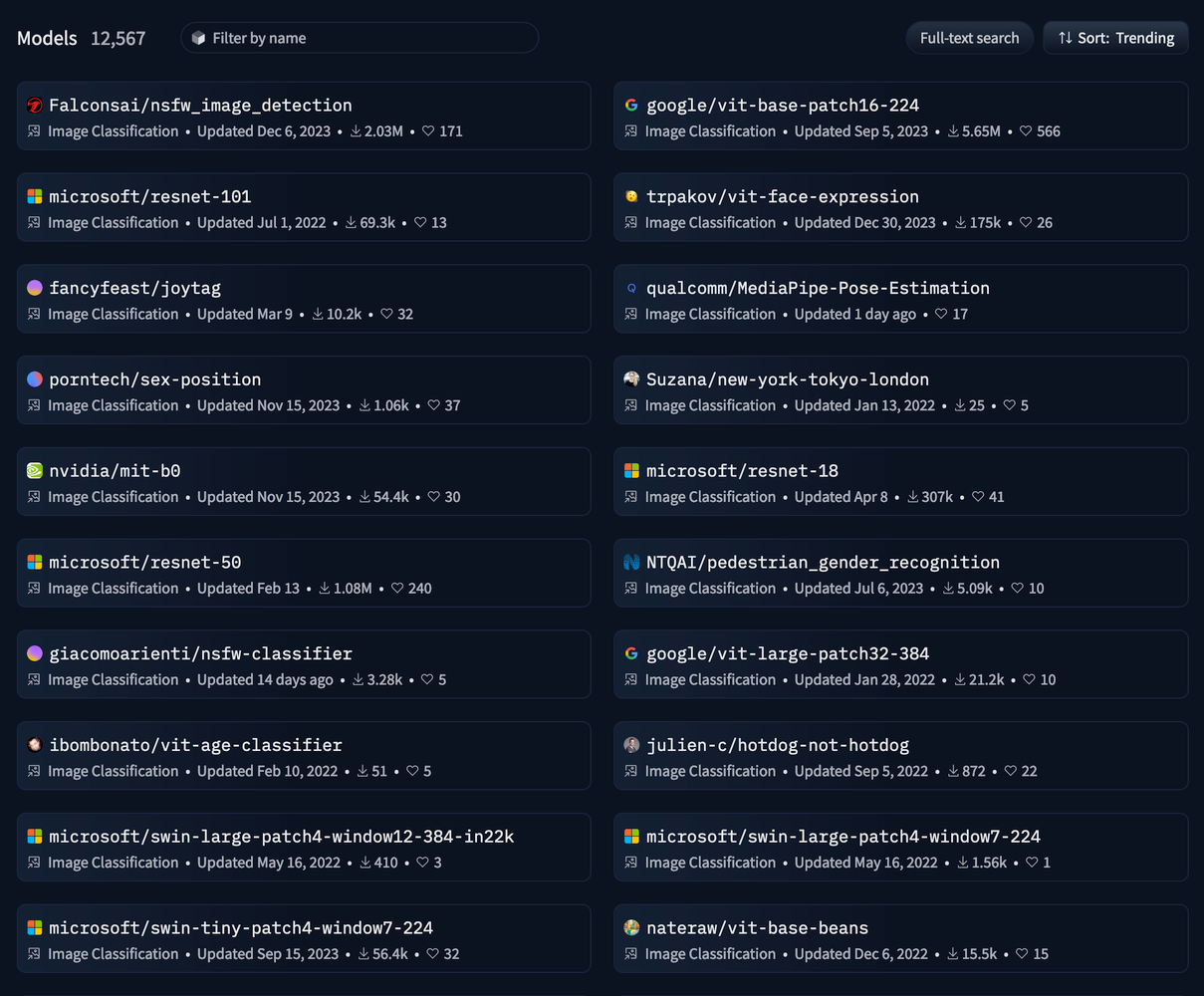

On the right-hand side, you'll see some of the models available for the Image Classification task.

Click "Browse Models" to list all of them:

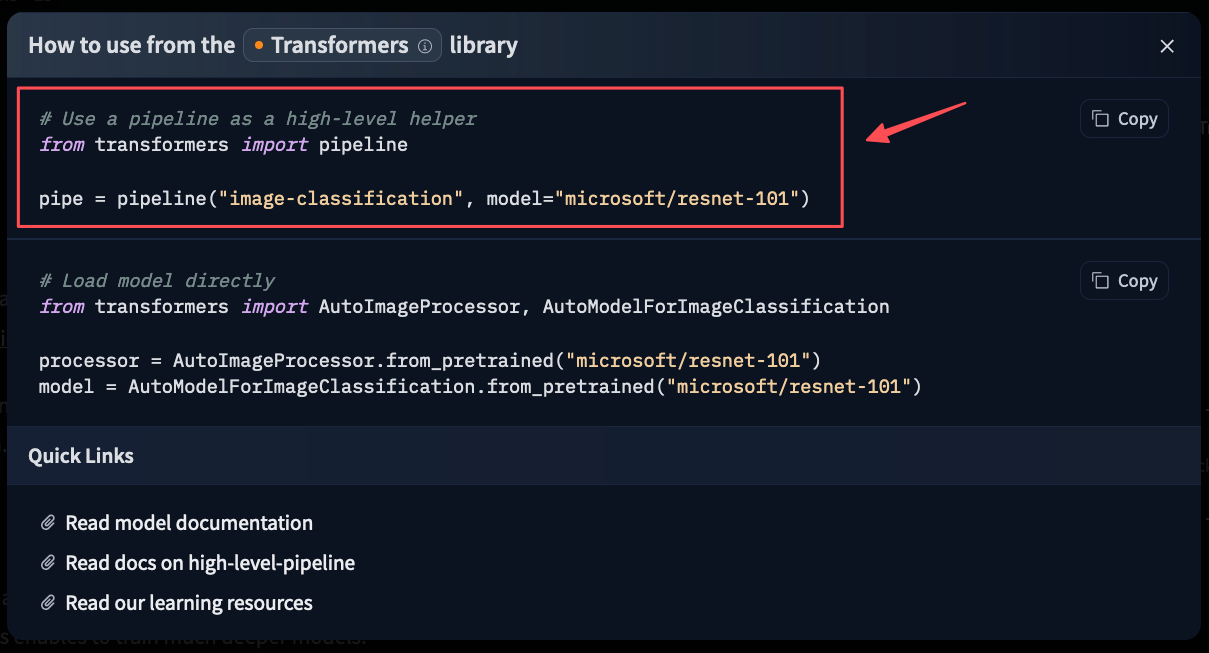

Select any of these models and go to your Python project. For example, I've chosen the microsoft/resnet-101 model.

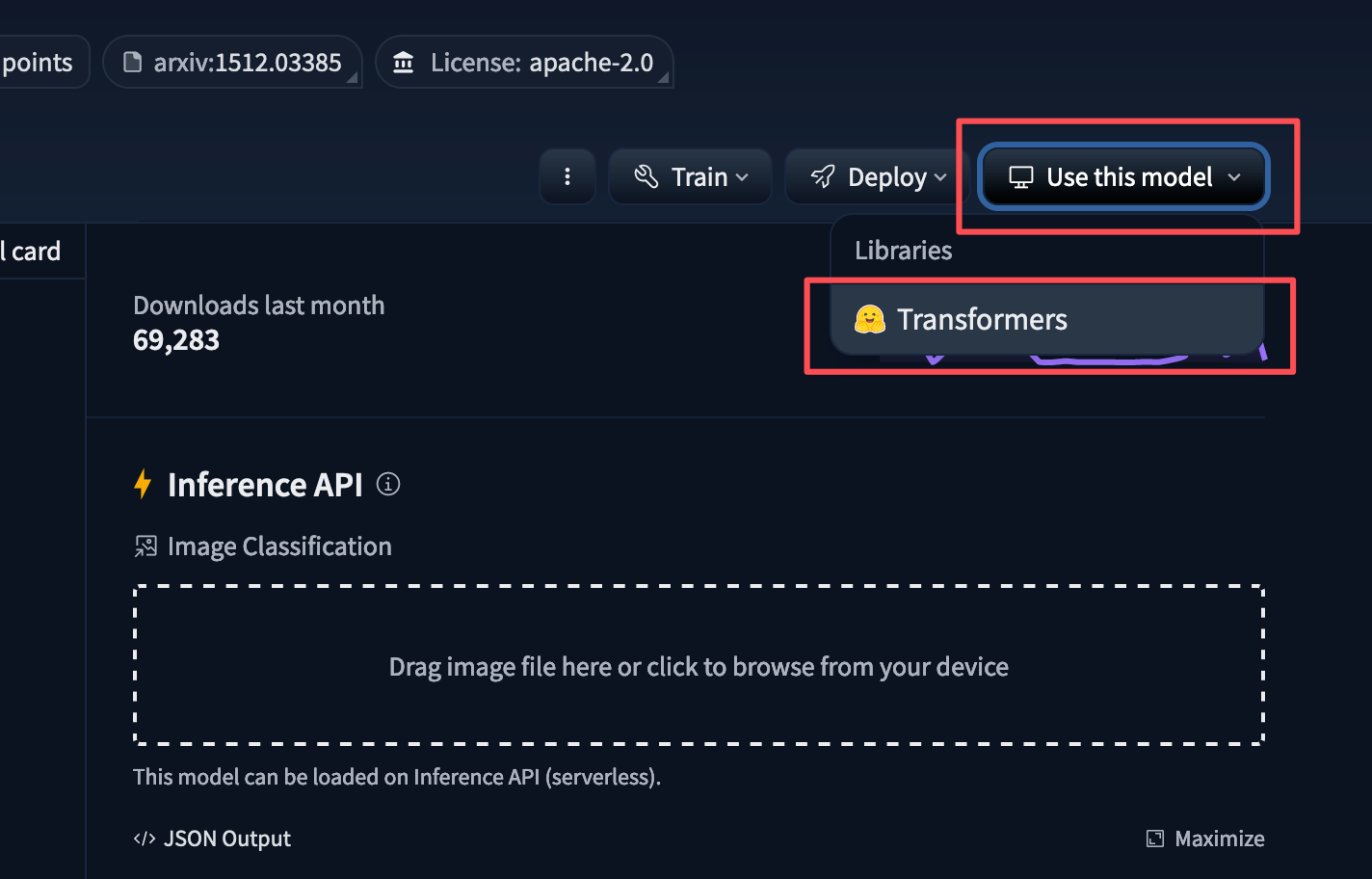

When you click on the model, it will take you to the model's page where you can see how the model can be used with transformers:

Click on "Use this model" and select "Transformers" from the drop-down menu:

You should be able to see the snippet:

Computer Vision Tasks

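As you might have noticed, the snippet only creates the pipeline; it doesn't show how to call it with an image.

For vision tasks, you call the pipeline object (named vision below) with your image as the first argument:

```python
from transformers import pipeline

# Image-classification pipeline backed by Microsoft's ResNet-101
# (image pipelines also need Pillow: pip install pillow)
vision = pipeline("image-classification", model="microsoft/resnet-101")

# Pass the image, here a URL, as the first argument
predictions = vision("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/pipeline-cat-chonk.jpeg")
print(predictions)
```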

The output should be something like this:

```
[{'label': 'lynx, catamount', 'score': 0.6211655139923096},
 {'label': 'tabby, tabby cat', 'score': 0.05320701003074646},
 {'label': 'badger', 'score': 0.02400618977844715},
 {'label': 'tiger cat', 'score': 0.017786771059036255},
 {'label': 'Egyptian cat', 'score': 0.014691030606627464}]
```
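These labels are ImageNet class names, the dataset microsoft/resnet-101 was trained on, and several of them pack synonyms into one string separated by commas (for example "tabby, tabby cat"). If you only want a short name for the best guess, a small post-processing step like the one below can help. The helper name `best_label` and the 0.5 cutoff are my own assumptions:

```python
from typing import Dict, List, Optional


def best_label(predictions: List[Dict], min_score: float = 0.5) -> Optional[str]:
    """Hypothetical helper: first synonym of the top-scoring label, or None if below `min_score`."""
    if not predictions:
        return None
    top = max(predictions, key=lambda p: p["score"])
    if top["score"] < min_score:
        return None
    # "lynx, catamount" -> "lynx"
    return top["label"].split(",")[0].strip()


# Output copied from the vision call above
predictions = [
    {"label": "lynx, catamount", "score": 0.6211655139923096},
    {"label": "tabby, tabby cat", "score": 0.05320701003074646},
    {"label": "badger", "score": 0.02400618977844715},
    {"label": "tiger cat", "score": 0.017786771059036255},
    {"label": "Egyptian cat", "score": 0.014691030606627464},
]
print(best_label(predictions))  # lynx
```

If you need more or fewer than the five predictions shown above, the image-classification pipeline also accepts a top_k argument, for example `vision(url, top_k=3)`.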

The image can be a URL, a local file path, a PIL image, or a base64-encoded image.

NLP tasks

Let's see how the pipeline function works for an NLP task:

```python
import torch
from transformers import pipeline

# facebook/bart-large-mnli is used here for zero-shot classification:
# it classifies text like a regular classifier, except that you choose
# the candidate labels yourself at inference time.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Naming the task explicitly avoids having to look it up on the Hub
classifier = pipeline("zero-shot-classification", model="facebook/bart-large-mnli", device=device)

result = classifier(
    "I have a problem with my iphone that needs to be resolved asap!!",
    candidate_labels=["urgent", "not urgent", "phone", "tablet", "computer"],
)
print(result)
```

Output:

```
{'sequence': 'I have a problem with my iphone that needs to be resolved asap!!',
 'labels': ['urgent', 'phone', 'computer', 'not urgent', 'tablet'],
 'scores': [0.503635823726654,
            0.4787995219230652,
            0.012600162997841835,
            0.0026557876262813807,
            0.0023087605368345976]}
```

As you can see, the labels are sorted by score, and "urgent" comes first because its score (0.503635823726654) is the highest.

In other words, the model checks how well the given text matches each of the candidate labels.

This is zero-shot classification: the model can sort text into categories it was never explicitly trained on. facebook/bart-large-mnli was trained on natural language inference (MNLI), and the pipeline turns each candidate label into a hypothesis such as "This example is urgent." and scores how strongly the text supports it. That is how it leverages pre-trained knowledge to make predictions based on the provided labels and the context of the text.
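One detail worth knowing: by default, the zero-shot pipeline normalizes the scores across all candidate labels so that they sum to 1, which is why "phone" (0.479) is nearly tied with "urgent" (0.504). The candidate labels here really answer two different questions (how urgent is it, and which device is it about?), so they compete with each other. Since each label is scored against the text independently before that normalization, renormalizing within a group of at least two labels should match what you would get by calling the classifier with only that group. The sketch below does this on the output above; the helper name and the group names are my own. Alternatively, passing multi_label=True to the classifier scores each label independently.

```python
from typing import Dict, List, Tuple


def best_per_group(result: Dict, groups: Dict[str, List[str]]) -> Dict[str, Tuple[str, float]]:
    """Hypothetical helper: for each label group, return (best_label, renormalized_score).

    Assumes `result` comes from a zero-shot-classification pipeline in its
    default single-label mode, where scores are normalized across all labels.
    """
    scores = dict(zip(result["labels"], result["scores"]))
    best = {}
    for group, labels in groups.items():
        total = sum(scores[label] for label in labels)
        top = max(labels, key=lambda label: scores[label])
        best[group] = (top, scores[top] / total)
    return best


# Output copied from the classifier call above
result = {
    "sequence": "I have a problem with my iphone that needs to be resolved asap!!",
    "labels": ["urgent", "phone", "computer", "not urgent", "tablet"],
    "scores": [0.503635823726654, 0.4787995219230652, 0.012600162997841835,
               0.0026557876262813807, 0.0023087605368345976],
}
groups = {"urgency": ["urgent", "not urgent"], "device": ["phone", "tablet", "computer"]}
for group, (label, score) in best_per_group(result, groups).items():
    print(f"{group}: {label} ({score:.3f})")
```

Seen this way, the text is clearly urgent and clearly about a phone, rather than "barely urgent".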

Conclusion

Hugging Face 🤗 is amazing because you don't need to be an AI expert to use it. Just select the model you want and pass it to the pipeline function. That's it.

Now, spend some time exploring it and try to build something.

Thanks for reading.